Introduction

❓ Alignment Data Generation: High-quality alignment data is critical for aligning large language models (LLMs). Is it possible to synthesize high-quality instructions at scale by directly extracting data from advanced aligned LLMs themselves?

🔍 Conversation Template: A typical input to an aligned LLM contains three key components: the pre-query template, the query, and the post-query template. For instance, an input to Llama-2-chat could be [INST] Hi! [/INST], where [INST] is the pre-query template and [/INST] is the post-query template. These templates are predefined by the creators of the aligned LLMs to ensure the correct prompting of the models.
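To make the three components concrete, here is a minimal sketch using the Llama-2-chat example above. The helper names `build_input` and `split_input` are hypothetical, and the exact whitespace around the markers is an assumption for illustration, not the model's official template.

```python
# Templates taken from the Llama-2-chat example above.
# The single spaces around the query are an assumption for illustration.
PRE_QUERY = "[INST] "
POST_QUERY = " [/INST]"


def build_input(query: str) -> str:
    """Wrap a user query in the pre-query and post-query templates."""
    return f"{PRE_QUERY}{query}{POST_QUERY}"


def split_input(text: str) -> tuple[str, str, str]:
    """Recover (pre-query template, query, post-query template) from an input."""
    if not (text.startswith(PRE_QUERY) and text.endswith(POST_QUERY)):
        raise ValueError("input does not follow the expected template")
    query = text[len(PRE_QUERY):len(text) - len(POST_QUERY)]
    return PRE_QUERY, query, POST_QUERY


full_input = build_input("Hi!")
parts = split_input(full_input)
```

Here `full_input` is the string `[INST] Hi! [/INST]`, and `parts` separates it back into its three components.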

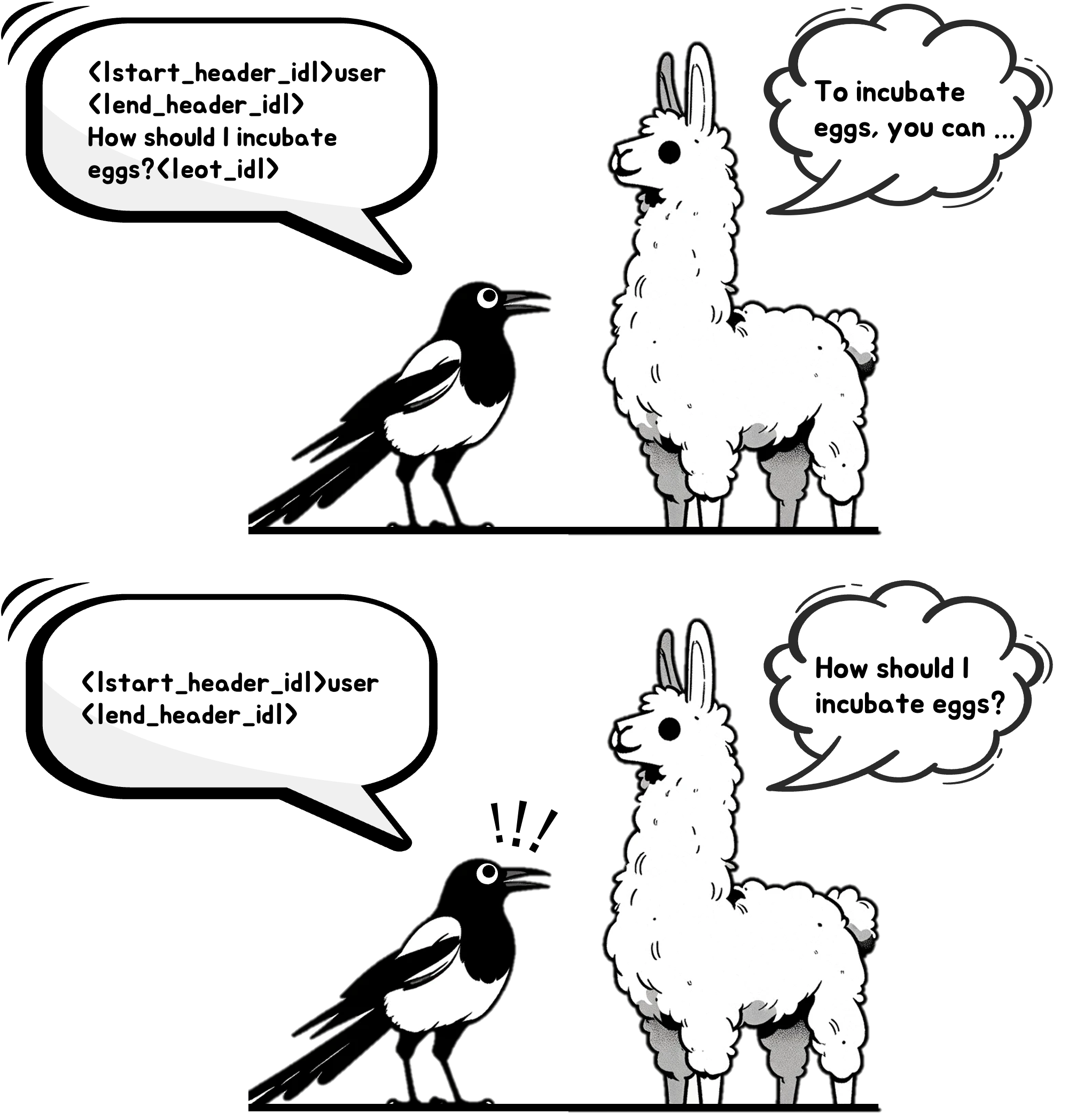

We observe that when we only input the pre-query template to aligned LLMs such as Llama-3-Instruct, they self-synthesize a user query due to their auto-regressive nature!

🐦 Magpie Dataset:

4M high-quality alignment instances extracted from the Llama-3 series (Magpie-Air-3M and Magpie-Pro-1M), with SOTA performance!

Introduction to Magpie

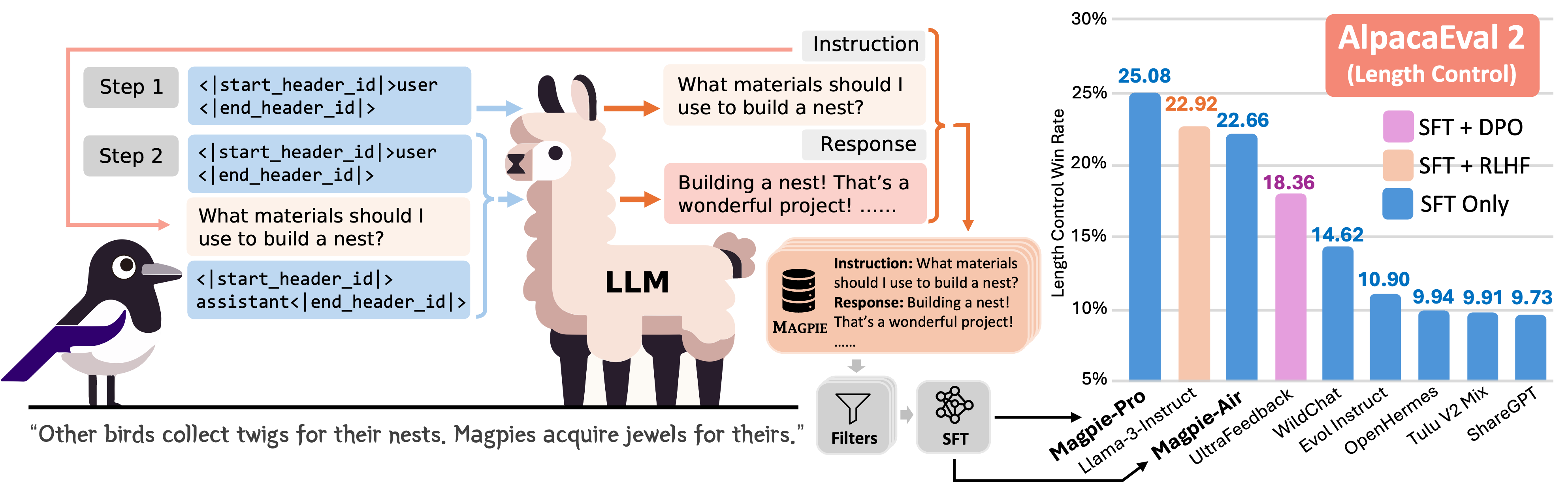

Magpie is a data synthesis pipeline that generates high-quality alignment data. Magpie does not rely on prompt engineering or seed questions. Instead, it directly constructs instruction data by prompting aligned LLMs with a pre-query template for sampling instructions.

Magpie Pipeline:

- Step 1: Instruction Generation: Magpie crafts an input query in the format of the LLM's predefined instruction template. This query defines only the role of the instruction provider (e.g., user) and does not contain any instruction. Because the auto-regressive LLM has been fine-tuned on instruction data in this template format, it autonomously generates an instruction when given the query crafted by Magpie.

- Step 2: Response Generation: Magpie sends the generated instruction back to the LLM to obtain the corresponding response.
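The two steps above can be sketched as follows. This is an illustrative outline rather than the authors' implementation: the `generate` callable is a hypothetical stand-in for any LLM completion call that stops at the end of a turn, the template strings are the commonly documented Llama-3-Instruct chat markers (an assumption to verify against the model's tokenizer configuration), and `toy_generate` is a stub that lets the sketch run without a model.

```python
from typing import Callable, Dict

# Assumed Llama-3-Instruct chat markers; check the model's tokenizer config.
PRE_QUERY = "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n"
POST_QUERY = "<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"

# Hypothetical interface: prompt in, completion out (stopping at end of turn).
Generate = Callable[[str], str]


def magpie_sample(generate: Generate) -> Dict[str, str]:
    """Produce one instruction-response pair from an aligned LLM."""
    # Step 1: send only the pre-query template, so the model
    # completes the user turn with an instruction of its own.
    instruction = generate(PRE_QUERY).strip()
    # Step 2: wrap that instruction in the full template and
    # let the model answer it as the assistant.
    response = generate(PRE_QUERY + instruction + POST_QUERY).strip()
    return {"instruction": instruction, "response": response}


def toy_generate(prompt: str) -> str:
    """Offline stub standing in for a real LLM call."""
    if prompt.endswith(POST_QUERY):
        return "Paris is the capital of France."
    return "What is the capital of France?"


pair = magpie_sample(toy_generate)
```

With a real model, `generate` would wrap a sampling call with a nonzero temperature, so that repeated calls to `magpie_sample` yield diverse instructions.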

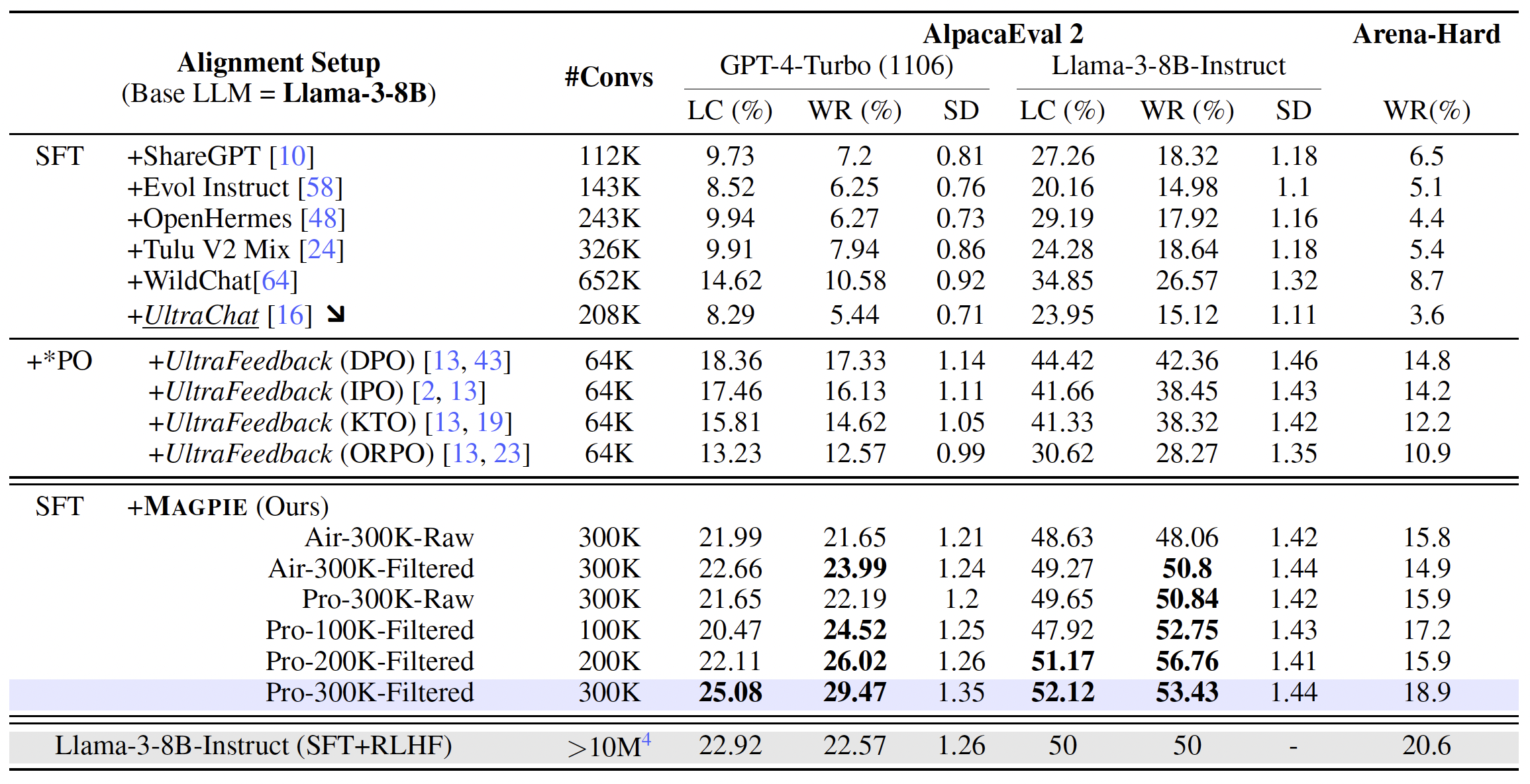

Magpie-Air and Magpie-Pro Datasets

Built with Meta Llama-3 Series

🤗 HuggingFace: https://huggingface.co/magpie-align

- Magpie-Air (Generated by Llama-3-8B): Magpie-Air-3M (full version, unfiltered), Magpie-Air-300K-Filtered (filtered version), Magpie-Air-MT-300K (multi-turn version).

- Magpie-Pro (Generated by Llama-3-70B): Magpie-Pro-1M (full version, unfiltered), Magpie-Pro-300K-Filtered (filtered version), Magpie-Pro-MT-300K (multi-turn version).

Performance of Magpie-Air and Magpie-Pro

🤗 HuggingFace Model: Magpie-Align/Llama-3-8B-Magpie-Pro-SFT-v0.1

Citation

@article{xu2024magpie,
  title={Magpie: Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing},
  author={Zhangchen Xu and Fengqing Jiang and Luyao Niu and Yuntian Deng and Radha Poovendran and Yejin Choi and Bill Yuchen Lin},
  journal={ArXiv},
  year={2024},
  volume={abs/2406.08464},
  url={https://api.semanticscholar.org/CorpusID:270391432}
}